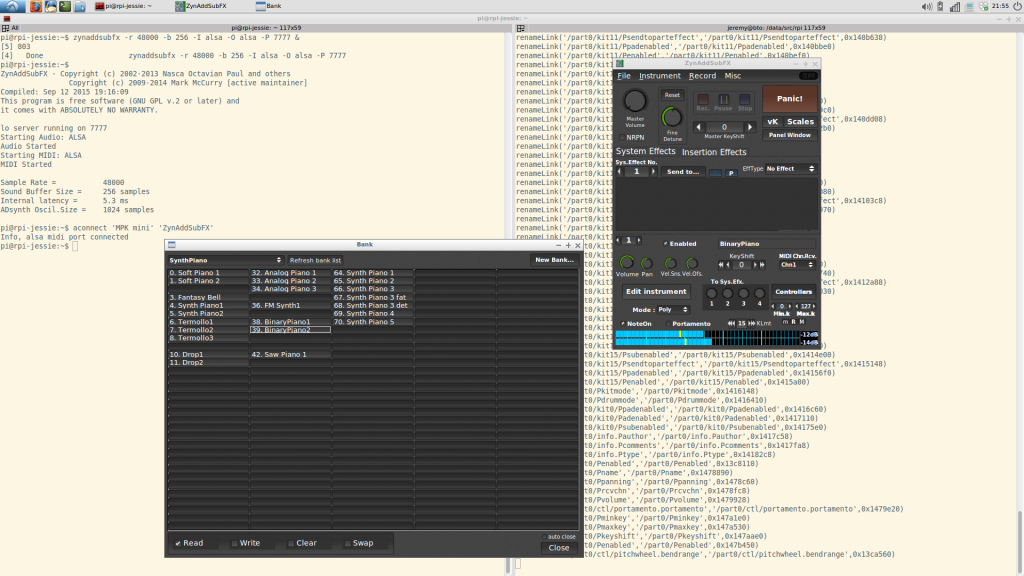

As a beta tester for MOD I thought it would be cool to play around with netJACK which is supported on the MOD Duo. The MOD Duo can run as a JACK master and you can connect any JACK slave to it as long as it runs a recent version of JACK2. This opens a plethora of possibilities of course. I’m thinking about building a kind of sidecar device to offload some stuff to using netJACK, think of synths like ZynAddSubFX or other CPU greedy plugins like fat1.lv2. But more on that in a later blog post.

So first I need to set up a sidecar device and I sacrificed one of my RPi’s for that, an RPi 3. Flashed an SD card with Raspbian Jessie Lite and started to do some research on the status of real time kernels and the Raspberry Pi because I’d like to use a real time kernel to get sub 5ms system latency. I compiled real time kernels for the RPi before but you had to jump through some hoops to get those running so I hoped things would have improved somewhat. Well, that’s not the case so after having compiled a first real time kernel the RPi froze as soon as I tried to runapt-get install rt-tests. After having applied a patch to fix how the RPi folks implemented the FIQ system the kernel compiled without issues:

Linux raspberrypi 4.9.33-rt23-v7+ #2 SMP PREEMPT RT Sun Jun 25 09:45:58 CEST 2017 armv7l GNU/Linux

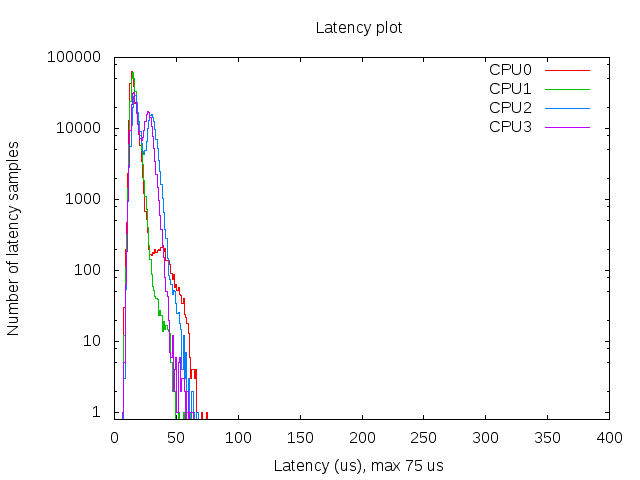

And the RPi seems to run stable with acceptable latencies:

So that’s a maximum latency of 75 µs, not bad. I also spotted some higher values around 100 but that’s still okay for this project. The histogram was created with mklatencyplot.bash. I used a different invocation of cyclictest though:

cyclictest -Sm -p 80 -n -i 500 -l 300000

And I ran hackbench in the background to create some load on the RPi:

(while true; do hackbench > /dev/null; done) &

Compiling a real time kernel for the RPi is still not a trivial thing to do and it doesn’t help that the few howto’s on the interwebs are mostly copy-paste work, incomplete and contain routines that are unclear or even unnecessary. One thing that struck me too is that the howto’s about building kernels for RPi’s running Raspbian don’t mention the make deb-pkg routine to build a real time kernel. This will create deb packages that are just so much easier to transfer and install then rsync’ing the kernel image and modules. Let’s break down how I built a real time kernel for the RPi 3.

First you’ll need to git clone the Raspberry Pi kernel repository:

git clone -b 'rpi-4.9.y' --depth 1 https://github.com/raspberrypi/linux.git

This will only clone the rpi-4.9.y branch into a directory called linux without any history so you’re not pulling in hundreds of megs of data. You will also need to clone the tools repository which contains the compiler we need to build a kernel for the Raspberry Pi:

git clone https://github.com/raspberrypi/tools.git

This will end up in the tools directory. Next step is setting some environment variables so subsequent make commands pick those up:

export KERNEL=kernel7 export ARCH=arm export CROSS_COMPILE=/path/to/tools/arm-bcm2708/gcc-linaro-arm-linux-gnueabihf-raspbian/bin/arm-linux-gnueabihf- export CONCURRENCY_LEVEL=$(nproc)

The KERNEL variable is needed to create the initial kernel config. The ARCH variable is to indicate which architecture should be used. The CROSS_COMPILE variable indicates where the compiler can be found. The CONCURRENCY_LEVEL variable is set to the number of cores to speed up certain make routines like cleaning up or installing the modules (not the number of jobs, that is done with the -j option of make).

Now that the environment variables are set we can create the initial kernel config:

cd linux make bcm2709_defconfig

This will create a .config inside the linux directory that holds the initial kernel configuration. Now download the real time patch set and apply it:

cd .. wget https://www.kernel.org/pub/linux/kernel/projects/rt/4.9/patch-4.9.33-rt23.patch.xz cd linux xzcat ../patch-4.9.33-rt23.patch.xz | patch -p1

Most howto’s now continue with building the kernel but that will result in a kernel that will freeze your RPi because of the FIQ system implementation that causes lock ups of the RPi when using threaded interrupts which is the case with real time kernels. That part needs to be patched so download the patch and dry-run it:

cd .. wget https://www.osadl.org/monitoring/patches/rbs3s/usb-dwc_otg-fix-system-lockup-when-interrupts-are-threaded.patch cd linux patch -i ../usb-dwc_otg-fix-system-lockup-when-interrupts-are-threaded.patch -p1 --dry-run

You will notice one hunk will fail, you will have to add that stanza manually so note which hunk it is for which file and at which line it should be added. Now apply the patch:

patch -i ../usb-dwc_otg-fix-system-lockup-when-interrupts-are-threaded.patch -p1

And add the failed hunk manually with your favorite editor. With the FIQ patch in place we’re almost set for compiling the kernel but before we can move on to that step we need to modify the kernel configuration to enable the real time patch set. I prefer doing that with make menuconfig. You will need the libncurses5-dev package to run this commando so install that with apt-get install libncurses5-dev. Then select Kernel Features - Preemption Model - Fully Preemptible Kernel (RT) and select Exit twice. If you’re asked if you want to save your config then confirm. In the Kernel features menu you could also set the the timer frequency to 1000 Hz if you wish, apparently this could improve USB throughput on the RPi (unconfirmed, needs reference). For real time audio and MIDI this setting is irrelevant nowadays though as almost all audio and MIDI applications use the hr-timer module which has a way higher resolution.

With our configuration saved we can start compiling. Clean up first, then disable some debugging options which could cause some overhead, compile the kernel and finally create ready to install deb packages:

make clean scripts/config --disable DEBUG_INFO make -j$(nproc) deb-pkg

Sit back, enjoy a cuppa and when building has finished without errors deb packages should be created in the directory above the linux one. Copy the deb packages to your RPi and install them on the RPi with dpkg -i. Open up /boot/config.txt and add the following line to it:

kernel=vmlinuz-4.9.33-rt23-v7+

Now reboot your RPi and it should boot with the realtime kernel. You can check with uname -a:

Linux raspberrypi 4.9.33-rt23-v7+ #2 SMP PREEMPT RT Sun Jun 25 09:45:58 CEST 2017 armv7l GNU/Linux

Since Rasbian uses almost the same kernel source as the one we just built it is not necessary to copy any dtb files. Also running mkknlimg is not necessary anymore, the RPi boot process can handle vmlinuz files just fine.

The basis of the sidecar unit is now done. Next up is tweaking the OS and setting up netJACK.

Edit: there’s a thread on LinuxMusicians referring to this article which already contains some very useful additional information.